Observability: the new wave or another buzzword?

OH - "Observability - because devs don't like to do "monitoring" we need to package it in new nomenclature to make it palatable and trendy."

— Cindy Sridharan (@copyconstruct) July 28, 2017

I think you'll be hearing more about observability in 2018. In this post, I share why I believe the term has emerged and why you'll be hearing more about observability vs. monitoring.

Failing Softly

The human body is remarkably good at isolating soft failures. When I catch a cold, I'll have less energy. I'll be a bit grumpy. But, I'm still generally functioning: I can still work, eat, breath, and sleep.

Like the human body, our software systems have evolved over the past several years to fail hard less often:

Today, it's significantly easier for engineering teams of all sizes to prevent hard failures: we have great caching tools to prevent overloading a database, our servers and containers will automatically recover or take themselves out of rotation on failure, and a single microservice may break while the main app remains usable.

Failure is evolving

Soft, contained failures are good. For those in an oncall rotation, this means fewer interrupted sleep cycles and dinner dates. However, there's a drawback to building these more resilient, coordinated services: there are exponentially more ways for these systems to fail. Configuring alerts to handle every possible failure isn't maintainable.

Think about a traditional three-tier monolith: you may setup alerts on two key metrics: 95th percentile response time and error rate. If you receive an alert, very frequently the root cause is isolated to one of those three tiers (like the database).

Now, think about an app backed by several microservices: those services may reside on the same host (or may not), may share the same database (or may not), may reside in entirely different datacenters, or may even be owned by an outside vendor. Different teams likely own each of those services and choose the components they are most productive with, leading to massively heterogeneous systems.

When there are more ways for a system to fail, it takes more time to identify the cause of that failure. Most of the time spent resolving a performance issue is the investigation and not the actual implementation. These soft-failing systems can be more difficult to debug when things go wrong.

Failure is shifting to application code

The core infrastructure our applications rely on (databases, container orchestration, etc) have matured rapidly. Frequently, these are maintained by vendors that know these complicated systems better than us, alleviating us of their maintenance burden. As these services have evolved, the complexity debt has shifted to our application code and how our separate services interact. When an oncall engineer receives an alert, the end result is frequently a change to application code and not restarting HAProxy, Nginx, Redis, or some other open-source service we build on.

Failure responsibility is shifting to developers

Much like how a primary care physician refers patients to specialists, operation engineers now routinly defer to developer teams when responding to an incident. In today's world of heterogeneous services, the best an oncall operation engineer can hope for is isolating a failure to a particular service. From there, a developer is required to modify the application code.

Enter observability

Monitoring tells you whether the system works. Observability lets you ask why it's not working.

— Baron Schwartz (@xaprb) October 19, 2017

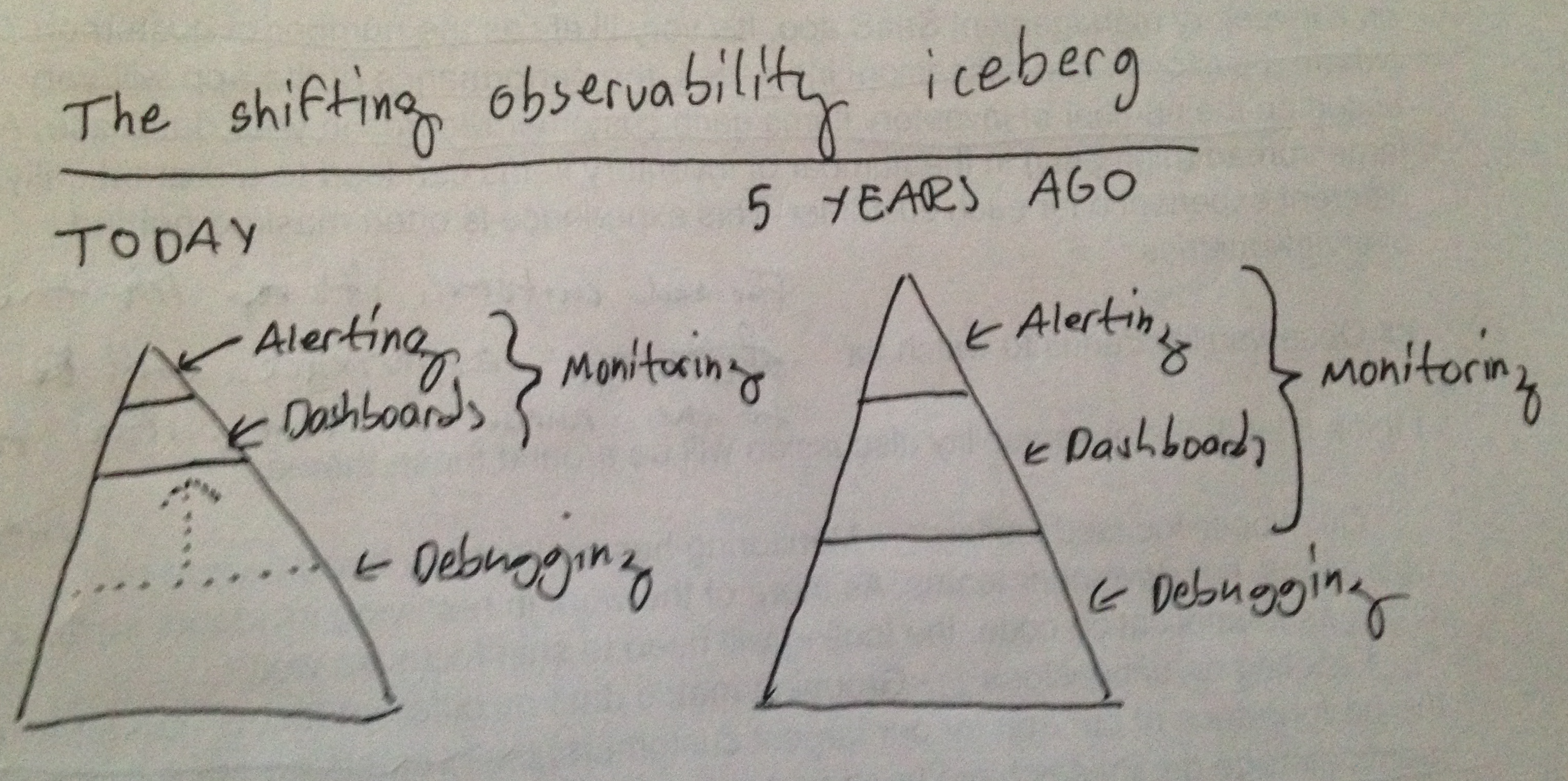

In Monitoring in the time of Cloud Native, Cindy Sridharan views monitoring (alerting and overview dashboards) as the tip of the observability iceberg with debugging beneath it. We've had alerting, dashboards, and debugging tools for decades, so why are we now deciding this needs an overarching name?

If we look at where the time is spent resolving a performance incident as the iceberg, a greater percentage of the iceberg is now in debugging:

As the composition of monitoring and debugging has shifted, we need an overarching container to describe the parts. This term is observability.

I think we see evidence of this is in the tooling that emerged first at large players that provide better debugging and or platforms that make it easier to understand the moving parts of distributed systems (Google's Dapper, Facebook's Canopy+Scuba, and Twitter's Finagle).

It helps me to understand the relationship between the two parts of observability by asking these questions:

- Monitoring - are you alerted on known failures and do you have a good starting point after receiving an alert?

- Debugging - are you able to quickly isolate a problem using as few team members as possible?

Observability trends to watch for

I think a lot of the observability discussion will be around these themes:

- Developer-focused tooling - Monitoring has traditionally been owned by operations, not developer teams. As more of the work in resolving incidents shifts to changes to application code, the tooling will need to shift focus as well.

- Faceting on dimensions - Soft failures are isolated to a specific set of dimensions that can get lost on top-level monitoring views. However, grouping metric data by different facets (ie - show me the performance of our app for our largest customers) requires significant tradeoffs in terms of real-time availability, sampling, UI, and the amount of historical data that is stored.

- Integrated tooling - Determining the level of integration between logging, metrics, and tracing.

- DRY'ing up observability code - Having observability on a web request likely means it contains some level of logging, metrics, and tracing. Writing three separate pieces of code for this is painful. Concepts like OpenTracing may evolve to address this.

TL;DR

The tradeoff for more resilient, soft-failing software systems is more complex debugging when things go wrong. As these problems are now more likely to reside deep in application code - which wasn't the case not along ago - observability tooling is playing catchup.